Using Database Triggers for Caching Counts

This is a story

all about how

my counts got flipped

turned rightside down

Today’s post looks at ways to improve an application by leaning more heavily on the database to manage data. Specifically we look at using database triggers for derived inventory counts, simplifying the application code and solving a deadlock along the way.

Moving Data Integrity into the Database

Some of the most satisfying code cleanups I’ve done recently have involved reinforcing data integrity checks from the application down into the database. Modern MySQL (and old Postgres) have plenty of options for add data constraints ranging from a simple NOT NULL to more complex constraints and they are generally rock solid.

Database-level constraints like these are useful because they run no matter what, even if your application validation is bypassed.

- they run if you’re doing bulk

INSERT INTO target (something) SELECT else FROM external_datafrom the command line - they run if you’re writing things like

UPDATE target SET something = (SELECT else), - they run even if you’re manually editing rows like a maniac 👺

Most times I’ll keep the application-level constraints as well.

Here’s an example using MySQL 8 check constraints as well as application-level validation (in Rails in this case). Both types of constraint help ensure items.quantity_available never goes below 0:

diff --git a/app/models/item.rb b/app/models/item.rb

+ validates :quantity_available, numericality: { only_integer: true, greater_than_or_equal_to: 0 }diff --git a/db/migrate/20210101000000_add_inventory_check_constraints.rb b/db/migrate/20210101000000_add_inventory_check_constraints.

+class AddInventoryCheckConstraints < ActiveRecord::Migration[6.1]

+ def change

+ add_check_constraint :items, "quantity_available >= 0", name: "chk_items_minimum_quantity_available"

+ end

+end

diff --git a/db/structure.sql b/db/structure.sql

CREATE TABLE `items` (

+ CONSTRAINT `chk_items_minimum_quantity_available` CHECK (`quantity_available` >= 0)In this example we mirror the constraint (greater than or equal to zero) in both application code and in the database. This redundancy gives us a few nice things:

From the application-level constraint we get:

- nice error messages to display to the user (database constraint errors are usually pretty ugly)

- easy-to-find validation rules for other developers when they’re browsing the application code (they don’t need to check the database schema)

From the database-level constraint we get:

- a guarantee that any manual changes, data imports or database-level calculations will never create invalid data

- rules that will be enforced if any other applications start working with our database

This is all pretty straightforward. The rest of this post is going to look at using the database to actually create and mange valid data within the database itself.

UPDATE constraints SET volume = 11

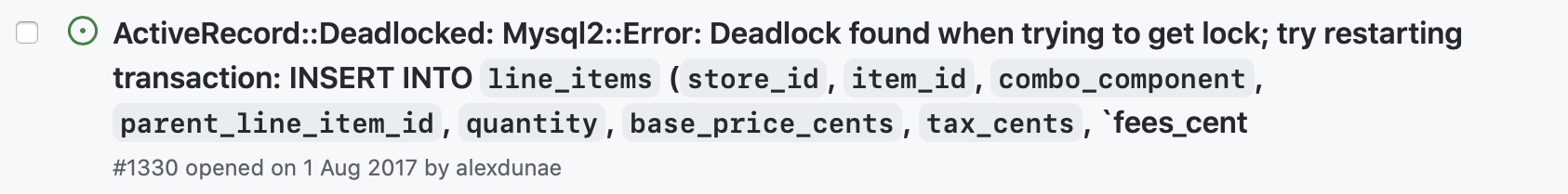

The saddest entries I see in my bug tracker are: Mysql2::Error: Deadlock found when trying to get lock; try restarting transaction. They mean that there’s a database deadlock and I know we’re in for some tedious and delicate Heisenbug hunting to trace down the exact set of conditions that caused the database to lock. Yuck.

In one case case – issue #1330 from August 1, 2017 in the bug tracker - it became clear the deadlock was caused by our inventory updating code.

The application generates tickets for live events, with a limited number of each ticket type available. In order to have a 100% accurate inventory count we queried the inventory immediately before adding any items to an order.

This sort of code would run many times per second:

START TRANSACTION;

-- get quantity immediately before adding items to an order to ensure 100% accuracy

SELECT SUM(quantity) FROM line_items WHERE item_id = 'item1';

-- insert if available

INSERT INTO line_items (order_id, item_id, quantity) VALUES ('order1', 'item1', 2);

COMMIT;Here’s the problem: SELECT SUM... would lock the line_items table at the same time as another purchaser was trying to INSERT line_items, creating deadlocks.

We needed a solution that would not run SELECT SUM... in the middle of the critical path of our application.

Cache it?

The first fix we tried to get rid of SELECT SUM... involved caching the inventory counts in Redis. This had potential but our implementation was never solid (a story for another day). We eventually moved on to our current solution: cache the inventory counts directly in the database using triggers.

Trigger happy

A database trigger is…

…a special stored procedure that is run when specific actions occur within a database. Most triggers are defined to run when changes are made to a table’s data …

You can write triggers that automatically run after INSERT, UPDATE or DELETE actions. Like constraints, they’re guaranteed to run no matter how you interact with your database (even manual editing like a maniac 👺). The trigger gets the value of the rows before and after your change, and you can use both old and new values to write some more SQL of your choosing.

Importantly, if a trigger fails it rolls back the entire operation so we don’t need to worry about data getting out of sync.

The new design

The system we landed on to keep our inventory counts in sync worked like this:

- listen for any change to the

line_itemstable - when a change is triggered, increment or decrement the related

items.quantity_soldfield by the amount inline_items.quantity

This meant no more SELECT SUM.... Just simple + and -.

Step: Add an inventory count column to items table and model

This column is read-only to the application and will only be updated by the triggers.

diff --git a/app/models/item.rb b/app/models/item.rb

class Item < ApplicationRecord

+ attr_readonly :quantity_sold

enddiff --git a/db/migrate/20210101000000_add_quantity_sold.rb b/db/migrate/20210101000000_add_quantity_sold.

+class AddQuantitySold < ActiveRecord::Migration[6.1]

+ # add counter column to the Items table - this will only be edited by the trigger

+ def change

+ add_columns :items, :quantity_sold, :integer, default: 0, null: false

+ end

+end

diff --git a/db/structure.sql b/db/structure.sql

CREATE TABLE `items` (

+ `quantity_sold` int NOT NULL DEFAULT '0',Step: Create database triggers for each operation

-- the "AFTER INSERT" trigger

-- simply increments the value of quantity_sold (or decrements if the NEW.quantity is negative)

-- we always update timestamps, too, but you may not need to

CREATE TRIGGER `line_items_after_insert_row_tr` AFTER INSERT ON `line_items`

FOR EACH ROW

BEGIN

UPDATE items

SET quantity_sold = (quantity_sold + COALESCE(NEW.quantity, 0)),

updated_at = UTC_TIMESTAMP(6)

WHERE id = NEW.item_id;

END--- the "BEFORE DELETE" trigger

CREATE TRIGGER `line_items_before_delete_row_tr` BEFORE DELETE ON `line_items`

FOR EACH ROW

BEGIN

UPDATE items

SET quantity_sold = (quantity_sold - OLD.quantity),

updated_at = UTC_TIMESTAMP(6)

WHERE id = OLD.item_id;

END--- the "BEFORE UPDATE" trigger

--- this is a special guard to forbid changing a line item's ITEM_ID once it has been set

--- adding that restriction dramatically simplified our trigger code and fits logically with

--- how our system works. If you find your triggers getting crazy feel free to set your own guards

--- to limit the possible states your data can be in.

---

--- For MySQL the easiest way to signal an error is with SIGNAL SQLSTATE

--- https://dev.mysql.com/doc/refman/8.0/en/signal.html

CREATE TRIGGER `line_items_before_update_row_tr` BEFORE UPDATE ON `line_items`

FOR EACH ROW

BEGIN

IF NEW.item_id <> OLD.item_id OR (NEW.item_id IS NULL) <> (OLD.item_id IS NULL) THEN

SIGNAL SQLSTATE '45000' SET message_text = 'Changes to LineItem#item_id are forbidden';

END IF;

END-- the "AFTER UPDATE" trigger

-- in order to reduce un-necessary update calls this trigger only runs

-- if an update actually changes the quantity

-- it also uses COALESCE to ensure our NEW.value is non-null

CREATE TRIGGER `line_items_after_update_row_tr` AFTER UPDATE ON `line_items`

FOR EACH ROW

BEGIN

IF NEW.quantity <> OLD.quantity THEN

SET @delta_quantity_sold = (COALESCE(NEW.quantity, 0) - COALESCE(OLD.quantity, 0));

UPDATE items

SET quantity_sold = (quantity_sold + COALESCE(@delta_quantity_sold, 0)),

updated_at = UTC_TIMESTAMP(6)

WHERE id = NEW.item_id;

END IF;

ENDStep: Add tests

It’s important to be able to run your tests against the same version of database that you run in production. That’s a good practice anyways, and thankfully Rails and modern CI systems make that super easy.

Because this code was going to be largely out-of-sight, we added a decent number of unit tests to ensure every edge case results in proper counts.

Did it work? Ya!

This system has been running without issue for several years now and is rock solid. There are no more deadlocks since we no longer run SELECT SUM... and so are putting way less presure on the line_items table.

Moving such crucial code out of the application layer was nerve-wracking. This post began by talking about how great it was to have constraints at both the application and database levels. Well, this change moved the responsibility for data integrity entirely out of the application and into the database.

We knew the database would do a good job but we were worried about the developer experience. Would the triggers be hard to work with? Would they fail and be hard to debug? Would the math be wrong and we wouldn’t know why? Would we forget how they worked?

It also just seemed too simple. We were used to always running SUM on the entire data set. This just did puny little + and - operations on a single row at a time, relying on the atomicity of the database engine.

Happily, our worries have not come true. Triggers have been great. I think the reason triggers worked so well is that we chose a stable, rarely-changing and narrowly-scoped slice of our application. I wouldn’t want triggers in a chunk of code that changed frequently.

A few Rails specifics

This strategy works for any tech stack. We use Rails, which give us a few niceties. The main one is that Rails stores the database migrations directly beside application code, keeping things in sync. We don’t have dedicated DBAs, so the same people write SQL and Ruby, allowing for super close coordination.

The other is that Rails makes it easy to spin up and destroy test databases using the same database engine we use in production. This is key for getting good test coverage of our triggers.

We manually write the trigger modifying code in our Rails migrations. We looked at using the hair_trigger gem but I don’t think it played well with MySQL way back then. It might now. In any case, this code changes so rarely it’s not a big deal.

Further Reading

This blog post by Evil Martians provides some great info about using database triggers.

MySQL bug #11472 about foreign keys and triggers holds a dear place in many people’s hearts. Opened in 2005 it still persists unfixed. People stop by to wish it happy birthday now.

The End

Thanks to Corey Maas, Nico teWinkel and Yosuke Hasumi for reading drafts of this post.

And thank you for reading it!

I'd love to hear any feedback you might have about this post. Tweet me up at @mrmrbug or email me at code@dunae.ca.

You can also grab a little bit of RSS or check out the rest of the blog.